Statutory warning: It's a long read.

I start by sharing a regret: I did not hold on to the Budget and Economics 101 blog post for one more day. I had been holding on to it and thinking about it for almost a couple of weeks before posting; if I had waited just a day more, I would have been able to share an Indian Express story. While I thought that work on the budget starts around 3 months before the budget, I learned from that article that it takes 6 months. As can be seen in the article, it is somewhat of a wasted opportunity, part of it probably due to Government mismanagement (irrespective of political party, dynasty etc.).

What has not been stated in the article is what I had shared earlier: reading between the lines, it seems that the Government isn't able to trust what it hears from its advisers and the man on the street. This is unlike the advice of Chanakya and many wise people before him, who are credited with advising on good governance: that a good king is one who goes out in disguise, learns how his/her subjects are surviving, sees what ails them, and takes (or even declines to take) corrective steps after seeing the problem from various angles. Of course it's easier said than done, though a lot of Indian kings did try and ran successful provinces. There were also some who were more interested in gambling and women and frittered away their kingdoms.

The 6-month period, while not spelled out in the Express article, is probably more about checking and re-checking figures and sources to make sure they are able to read whatever pattern the various big businesses, industry, social welfare schemes and people are showing, I guess. And unless mass digitization as well as an overhaul of procedures and of Right to Information (RTI) happens, I don't see any improvement in the way information is collected, interpreted and shared with the public at large.

It would also require people who are able to figure out how things work to share their inferences (right or wrong) through various media, so that there is discussion about figures and policy-making. Such researchers and their findings are sadly missing from Indian public discourse and are found only in glossy coffee-table books :(.

One of the most basic questions, for instance, is: how much of any policy should be based on facts and figures, and how much on giving a fillip to products and services needed in the short to medium term?

Also, how much of a part should morality play in public policy?

Surprisingly, or probably not, most Indian budgets are populist by nature, with some scientific basis, but most of the time there is no dialogue about how the FM came to a given conclusion or policy. I am guessing a huge part of that also has to do with basic illiteracy as well as economic and financial illiteracy.

Just to share an example well known the world over, one of the policies where the Government of India has been somewhat lethargic is wired broadband penetration. As I have shared umpteen times, while superficially broadband penetration is happening, most of it is the unreliable and more expensive mobile broadband.

While this may come as a shock to many users of technology, BSNL, a Government company which serves almost 70-80% of ADSL wired broadband subscribers, gives a 50:1 contention ratio to its customers.

One can now understand the pathetic speeds, given also the very old copper wiring (20-odd years) on which the network is running. The idiom of running a network on duct tape seems pretty apt here.
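To put the 50:1 figure in perspective, here is a rough back-of-the-envelope sketch. The 2 Mbps plan speed is purely an illustrative assumption and not a BSNL figure, and the function name is made up for this example. It computes the worst-case share each subscriber gets if everyone sharing the link is active at the same time.

```python
def worst_case_kbps(plan_mbps, contention_ratio):
    """Worst-case per-subscriber throughput in kbps when every
    subscriber sharing the link is active at the same time."""
    return plan_mbps * 1000 / contention_ratio


# Hypothetical 2 Mbps plan at the 50:1 contention ratio mentioned above
print(worst_case_kbps(2, 50))  # 40.0
```

In practice not everybody is online at once, so real speeds usually sit somewhere between this floor and the advertised rate.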

Now, the Government a couple of years ago introduced FTTH (Fiber-to-the-home), but because the charges are so high, it's not going anywhere. The Government could offer, say, a 10% discount on your Income Tax rates if you get FTTH. This would push people to get FTTH and would also force BSNL to clean up its act. It has been documented that a percentage increase in broadband penetration equals a similar percentage rise in GDP.

Having higher broadband speeds would mean better quality of streaming video, as well as all sorts of remote teaching and sharing of ideas, which will give a lot of fillip to all sorts of IT peripherals in the short, medium and long term. Not to mention all the software that will be invented/coded to take advantage of all that speed.

Realistically speaking, though, I am cynical that the Government would bring in something like this.

Moving on

Another interesting story which I had shared was a bit about World History. Now the Economist has sort of confirmed how things are in Pakistan. What is and was interesting is that the article comes from a politically left-leaning magazine which is for globalization and business, among other things.

So there seem to be only three options: either the magazine and I are both correct, or we are both reading it wrong.

The third and last option is that the United States has realized that Pakistan can no longer be trusted, as Pakistan is siding more and more with the Chinese and Russians, hence the article. Although it seems a somewhat far-fetched idea, as I don't see the magazine getting any brownie points with President Trump. Unless The Economist becomes more hawkish, more right-wingish, due to the new establishment.

I can't claim to have any major political understanding or expertise, but it does seem that Pakistan is losing friends. Even the UAE has been cautiously building bridges with us. How this will play out in the medium to long term depends much on the personal equations of the two heads of state, happenings in geopolitics around the world and in the two countries, and the decisions they take. Still, it is a welcome opportunity in as far as they (the Saudis) have funds they want to invest and India can use those investments to build new infrastructure.

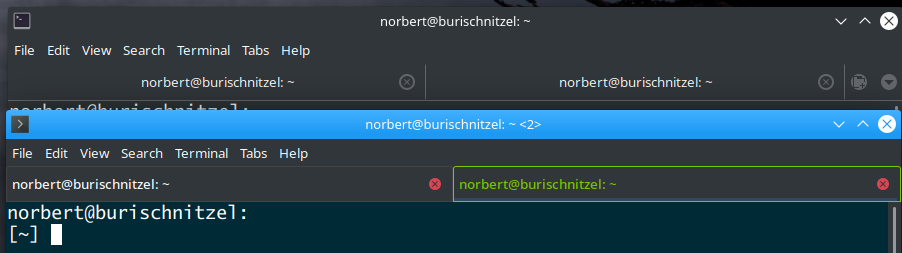

Now, I need a bit of help from Java and VCS (version control system) experts. There is a small game project called Mars-Sim. I asked probably a few more questions than I should have, and the result was that I was made a member of the game team even though I had shared with them that I'm a non-coder.

I think such a game is important as it's FOSS. Both the game itself and its build tools are FOSS, and it has a basic wiki. Such a game would be useful not only to Debian but to all free software distributions.

Journeying into the game

Unfortunately, the game as it is currently doesn't work with OpenJDK 8, but in private conversations the devs have shared that they will work on getting it to work on OpenJDK 9, which is still some time away.

Now, as it is a game, I knew it would have multiple multimedia assets. It took me quite some time to figure out where most of the multimedia assets are.

I was shocked to find no ready-made tool in Debian, or in GNU/Linux in general, to tell what types of content there are inside a directory and its sub-directories.

I framed it as a query and found a script as an answer. I renamed the script to file-extension-information.sh (for lack of imagination for a better name).

After that, I downloaded a snapshot of the head of the project from https://sourceforge.net/p/mars-sim/code/HEAD/tree/ where it shows a link to download the snapshot:

https://sourceforge.net/code-snapshots/svn/m/ma/mars-sim/code/mars-sim-code-3847-trunk.zip

I unzipped it and then ran the script on it:

[$] bash file-extension-information.sh mars-sim-code-3846-trunk

theme: 1770

dtd: 31915

py: 10815

project: 5627

JPG: 762476

fxml: 59490

vm: 876

dat: 15841044

java: 13052271

store: 1343

gitignore: 8

jpg: 3473416

md: 5156

lua: 57

gz: 1447

desktop: 281

wav: 83278

1: 2340

css: 323739

frag: 471

svg: 8948591

launch: 9404

index: 11520

iml: 27186

png: 3268773

json: 1217

ttf: 2861016

vert: 712

ogg: 12394801

prefs: 11541

properties: 186731

gradle: 611

classpath: 8538

pro: 687

groovy: 2711

form: 5780

txt: 50274

xml: 794365

js: 1465072

dll: 2268672

html: 1676452

gif: 38399

sum: 23040

(none): 1124

jsx: 32070

It gave me some idea of what sorts of files were in the repository. I do wish the script defaulted to showing file sizes in KB, if not MB, to better assess how the directory is made up, but that is not a big loss.
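The wish for sizes in KB or MB is easy enough to sketch in Python. This is not the script from the answer; it is a minimal stand-in that assumes the numbers in the listing are byte totals per extension. The function names are made up for this example. One difference to note: dot-files such as .gitignore are counted under "(none)" here rather than under their own name.

```python
import os
from collections import defaultdict


def human(n):
    """Format a byte count using binary units (KiB, MiB, GiB)."""
    size = float(n)
    for unit in ("B", "KiB", "MiB", "GiB"):
        if size < 1024 or unit == "GiB":
            return f"{size:.1f} {unit}"
        size /= 1024


def sizes_by_extension(root):
    """Walk root and total the size in bytes of files per extension."""
    totals = defaultdict(int)
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            if not os.path.isfile(path):  # skip broken symlinks
                continue
            ext = os.path.splitext(name)[1].lstrip(".") or "(none)"
            totals[ext] += os.path.getsize(path)
    return dict(totals)


def report(root):
    """Return 'ext: size' lines, largest total first."""
    items = sorted(sizes_by_extension(root).items(), key=lambda kv: -kv[1])
    return [f"{ext}: {human(total)}" for ext, total in items]
```

Calling `print("\n".join(report("mars-sim-code-3846-trunk")))` would then list the biggest extensions first, with readable sizes.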

The above listing told me that, at the very least, there are theme, JPG, dat, wav, png, ogg and gif files to deal with.

For lack of better tools, and to get an overview of where those multimedia assets are, I used ncdu:

[shirish@debian] - [~/games/mars-sim-code-3846-trunk] - [10210]

[$] ncdu mars-sim/

--- /home/shirish/games/mars-sim-code-3846-trunk/mars-sim --------------------------------------------------------------------------------------

46.2 MiB [##########] /mars-sim-ui

15.2 MiB [### ] /mars-sim-mapdata

8.3 MiB [# ] /mars-sim-core

2.1 MiB [ ] /mars-sim-service

500.0 KiB [ ] /mars-sim-main

188.0 KiB [ ] /mars-sim-android

72.0 KiB [ ] /mars-sim-network

16.0 KiB [ ] pom.xml

12.0 KiB [ ] /.settings

4.0 KiB [ ] mars-sim.store

4.0 KiB [ ] mars-sim.iml

4.0 KiB [ ] .project
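ncdu shows the total size of each directory but not how much of it is media. A small sketch like the following could fill that gap by totalling only media files under each top-level directory. The set of extensions is an assumption picked from the earlier listing, and the function name is made up for this example.

```python
import os
from collections import defaultdict

# Assumption: which extensions count as media, picked from the listing above
MEDIA_EXTS = {"jpg", "png", "gif", "svg", "wav", "ogg"}


def media_by_top_dir(root, exts=MEDIA_EXTS):
    """Total media bytes under each immediate child of root.

    Files sitting directly in root are reported under '.'.
    """
    totals = defaultdict(int)
    for dirpath, _dirs, files in os.walk(root):
        rel = os.path.relpath(dirpath, root)
        top = "." if rel == "." else rel.split(os.sep)[0]
        for name in files:
            path = os.path.join(dirpath, name)
            ext = os.path.splitext(name)[1].lstrip(".").lower()
            if ext in exts and os.path.isfile(path):
                totals[top] += os.path.getsize(path)
    return dict(totals)
```

Running `media_by_top_dir("mars-sim")` on the unzipped snapshot would give one byte total per module, which makes it easier to see whether the media really is scattered or mostly sits in one place.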

I found that all the media is distributed randomly and posted a ticket about it. As I'm not even a Java newbie, could somebody look at mokun's comment and help out, please?

On the same project, there has been talk of migrating to github.com.

Now, from whatever little I know of git, it keeps a copy of the whole repository history under the .git/ directory, so having multimedia assets under git is a bad, bad idea: every revision of a binary file is kept in the history, and there is no meaningful diff between two versions of a binary file, even when one is just the other with some additions or subtractions.

I did file a question but am unhappy with the answers given. Can anybody give some definitive answers on whether they have been able to do what I am proposing and, if yes, how they went about it?

And lastly

America was founded by immigrants. Everybody knows the story of how American Indians, the original inhabitants of the land, were overpowered by the European settlers. So any claim, then or now, that immigration did not help the United States is just a lie.

This came up due to remarks made on #debconf by andrewsh:

[18:37:06] I'd be more than happy myself to apply for an US tourist not transit visa when I really need it, as a transit visa isn't really useful, is just as costly as a tourist visa, and nearly as difficult to get as a tourist visa

[18:37:40] I'm not entirely sure I wish to transit through the US in its Trumplandia incarnation either

[18:38:07] likely to be more difficult and unfun

FWIW, I am in complete agreement with Andrew's assessment of how it might be for foreigners. It has been on my mind for quite some time, although andrewsh put it eloquently.

But as always, I'm getting ahead of myself.

The conversation came about because DebConf this year will be in Canada. For many a cheap flight, one of the likely layovers/stopovers is the United States.

I actually would have gone one step further: even if a transit visa were cheap, it would be equally unfun as it would discriminate.

About a couple of years back, a friend of mine, while explaining what a visa is, put it rather succinctly:

the visa officer looks at only 3 things

a. Your financial position, something which shows that you can take care of your financial needs if things go south

b. You are not looking to settle there unlawfully

c. You are not a criminal.

While costs do matter, what is more disturbing is the form of extremism being displayed therein. While Indians from the South Asian subcontinent in the US have been largely successful and love to live in peace (one-off incidents do and will happen anywhere), if I had to take a transit or tourist visa in this atmosphere, it would leave a bad taste in the mouth.

When one of my best friends is a Muslim, 20% of the population in India is made up of Muslims, and 99% of the time both communities co-exist in peace, I simply can't accept any alternative ideology. Even in Freakonomics 2.0 the authors shared that it's less than 0.1 percent of Muslims who are engaged in terrorist activities; if it were even 1 percent, then all the world's armed forces couldn't fight them and couldn't keep anyone safe. Which simply means that more than 99.9% of all Muslims are good.

This resonates strongly with me for a number of reasons. One of my uncles, in the early to late 80s, had an opportunity to visit Russia for official work. He went there, and there were Secret Police after him all the time. While he didn't know it, I later read that it was SOP (Standard Operating Procedure) whenever any foreigners came visiting the country, and not just foreigners: they had spies for their own citizens too.

Russka, a book I read several years ago, explained the paranoia beautifully.

The U.S. in those days, by contrast, was a more welcoming place for him.

I am thankful for, as well as find it strange, that Canada and the States have such different visa procedures. Canada would simply look at the above things, probably discreetly inquire whether you have been a bad boy/girl in any way, and then make a decision, which is fine. For the United States, even for a transit visa I would probably have to go to an interview, where my world view would probably be in conflict with the current American world view.

Interestingly, while I was looking at conversations on the web, one thing that is missing there is that nobody has talked about the intelligence community. What Mr. Trump is saying, in not so many words, is: even with all the e-mails we monitor and everything we do, our intelligence still can't catch you. It almost seems like a back-handed compliment to the extremists, saying you do a better job than our intelligence community.

This doesn't mean that the States doesn't have interesting things to give to the world: Star Trek conventions, the Grand Canyon (which would probably take me a month or more to explore even a little part of), NASA, Intel, AMD, SpaceX, CES (when it's held) and LPC (Linux Plumbers Conference, where the who's who come to think about the roadmap for GNU/Linux). What I wouldn't give to be a fly on the wall when LPC and CES happen in the States.

What I actually found very interesting is that in the current Canadian Government, if what I read and heard is true, Justin Trudeau, the Prime Minister of Canada, made 50% of his cabinet female. Just like in the article, studies even in the Indian parliament have shown that when women are in power, questions about social justice, equality and the common good get asked and policies made. If I do get the opportunity to be part of DebConf, I would like to see, hear, watch and learn how the women in the cabinet are doing things. I am assuming that the reporting and analysis standards for decisions are more transparent and that more people are engaged in the political process to know what their elected representatives are doing.

One other interesting point I came to know is that Canada is home to bicycling paths. While I stopped bicycling years ago, as it has been becoming more and more dangerous to bicycle here in Pune since there is no demarcation for cyclists, I am sure a lot of Canadians must be using this opportunity fully.

Lastly, on the DebConf preparation front, things have started becoming a bit more urgent and hectic. From a monthly IRC meet, it has now become a weekly meet. Both the wiki and the website are slowly taking shape.

http://deb.li/dc17kbp is a nice way to see the progress of the activities happening.

One important decision that will be taken today is where people will stay during DebConf. The options are on-site and two places around the venue, one about 1.9 km away and the other at about the 5 km mark. Each has its own good and bad points. It will be interesting to see which place gets selected and why.

Filed under: Miscellenous

Tagged: #budget, #Canada, #debconf organization, #discrimination, #Equal Opportunity, #Fiber, #svn, #United States, #Version Control, Broadband, Git, Pakistan, Subversion
